4 min read

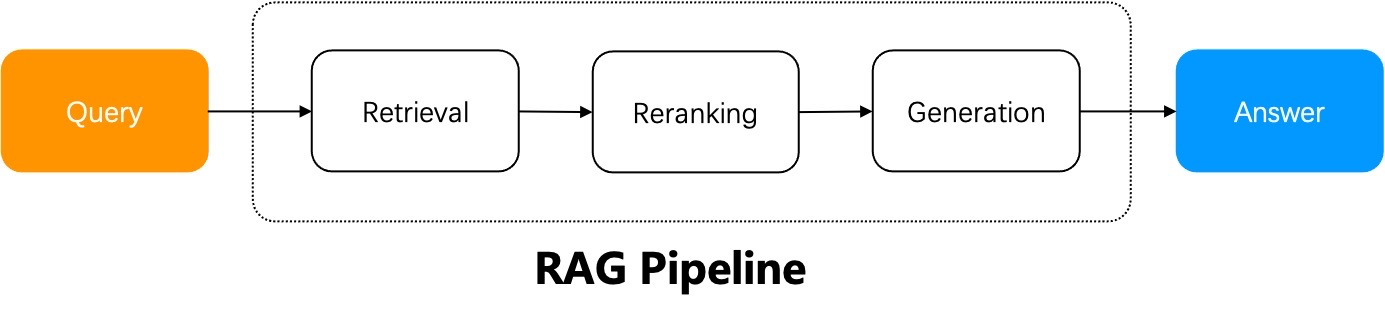

RAGFlow 0.24.0 — Memory API, knowledge base governance and Agent chat history

18 min read

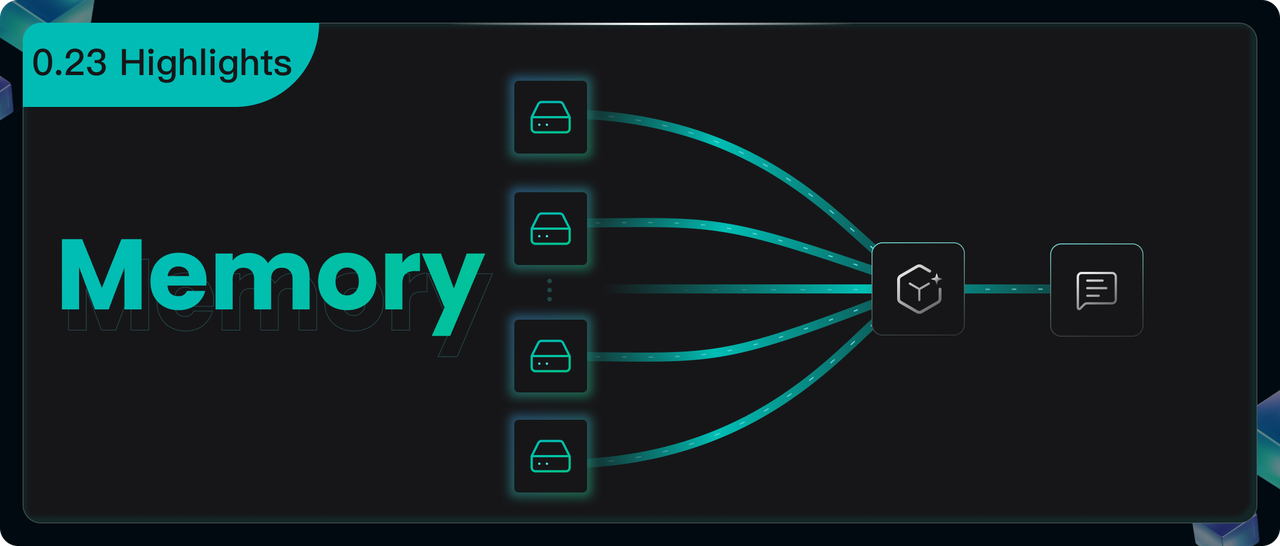

RAGFlow 0.23.0 — Advancing Memory, RAG, and Agent Performance

6 min read

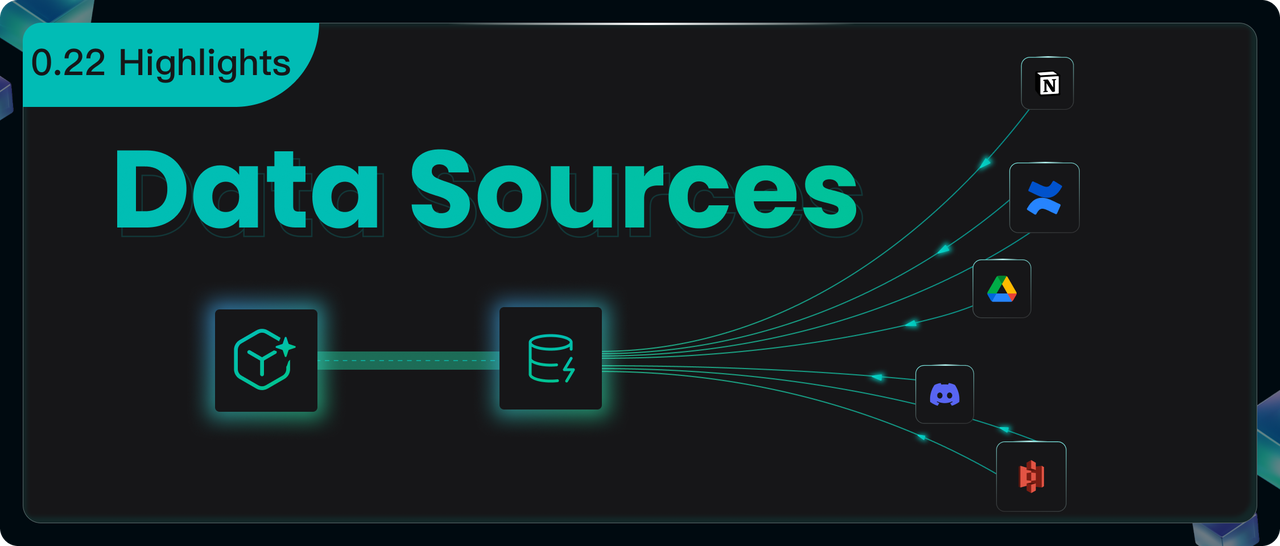

RAGFlow 0.22.0 Overview — Supported Data Sources, Enhanced Parser, Agent Optimizations, and Admin UI

3 min read

RAGFlow Named Among GitHub’s Fastest-Growing Open Source Projects, Reflecting Surging Demand for Production-Ready AI

18 min read

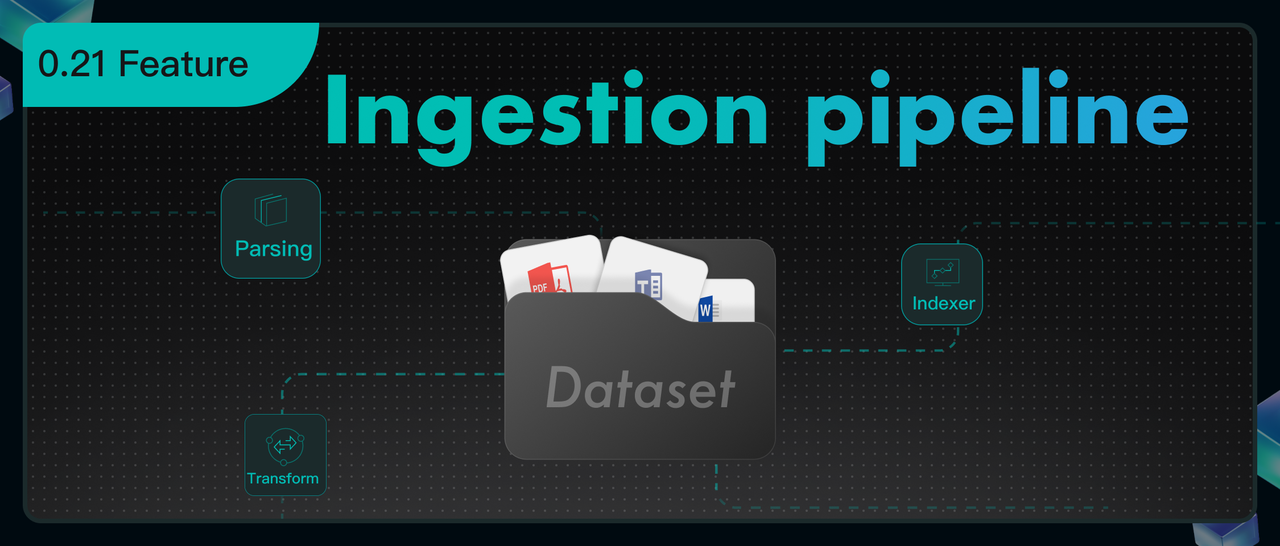

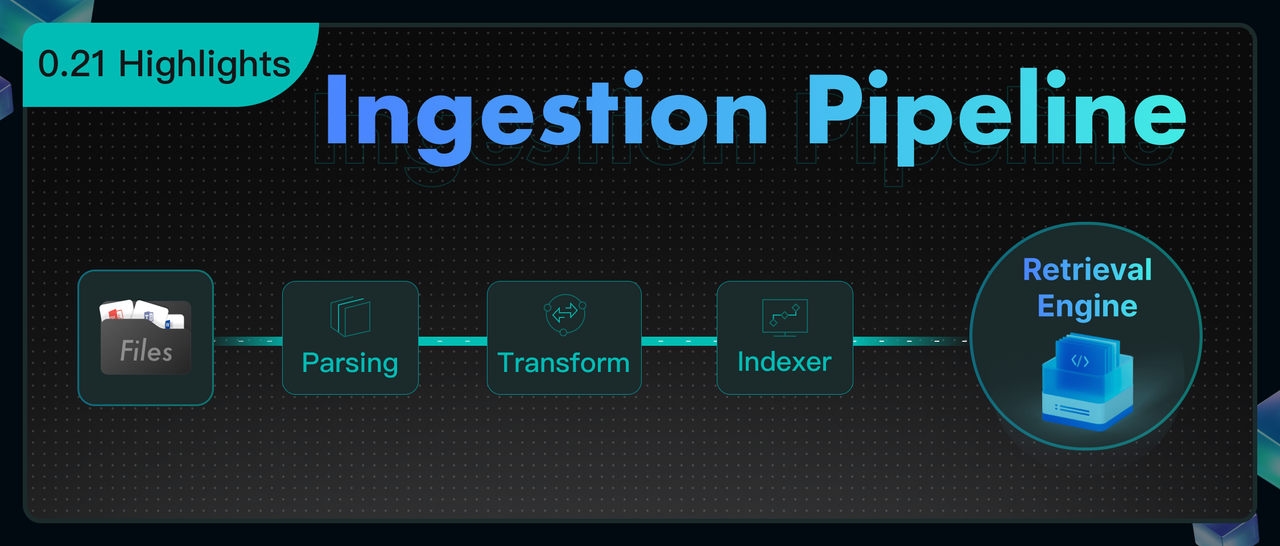

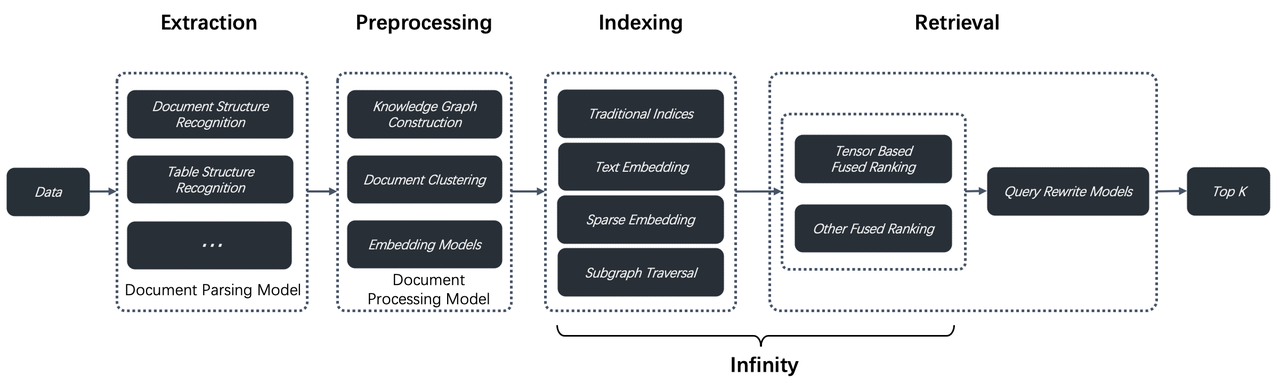

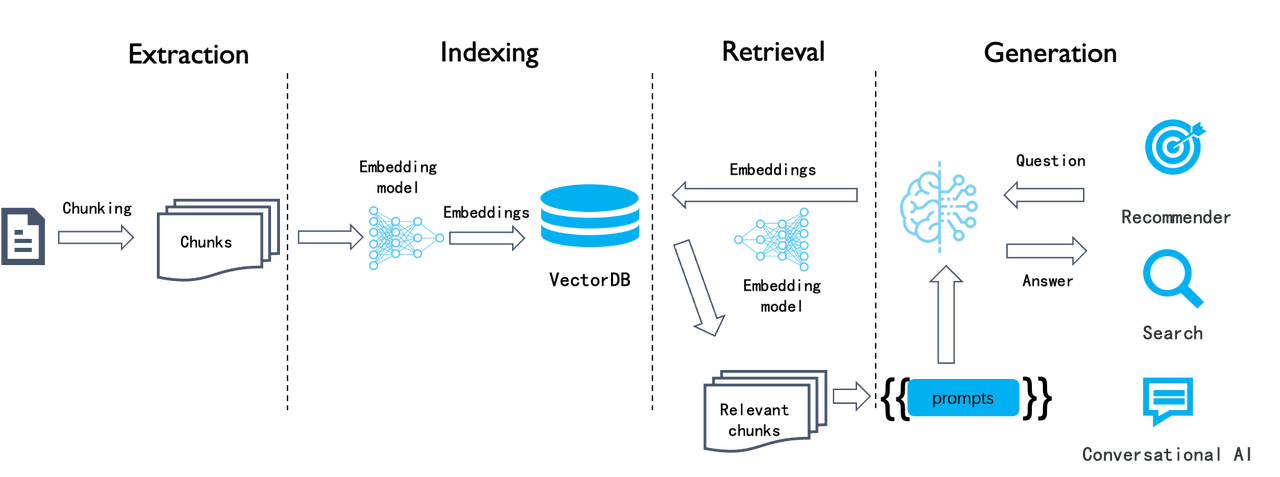

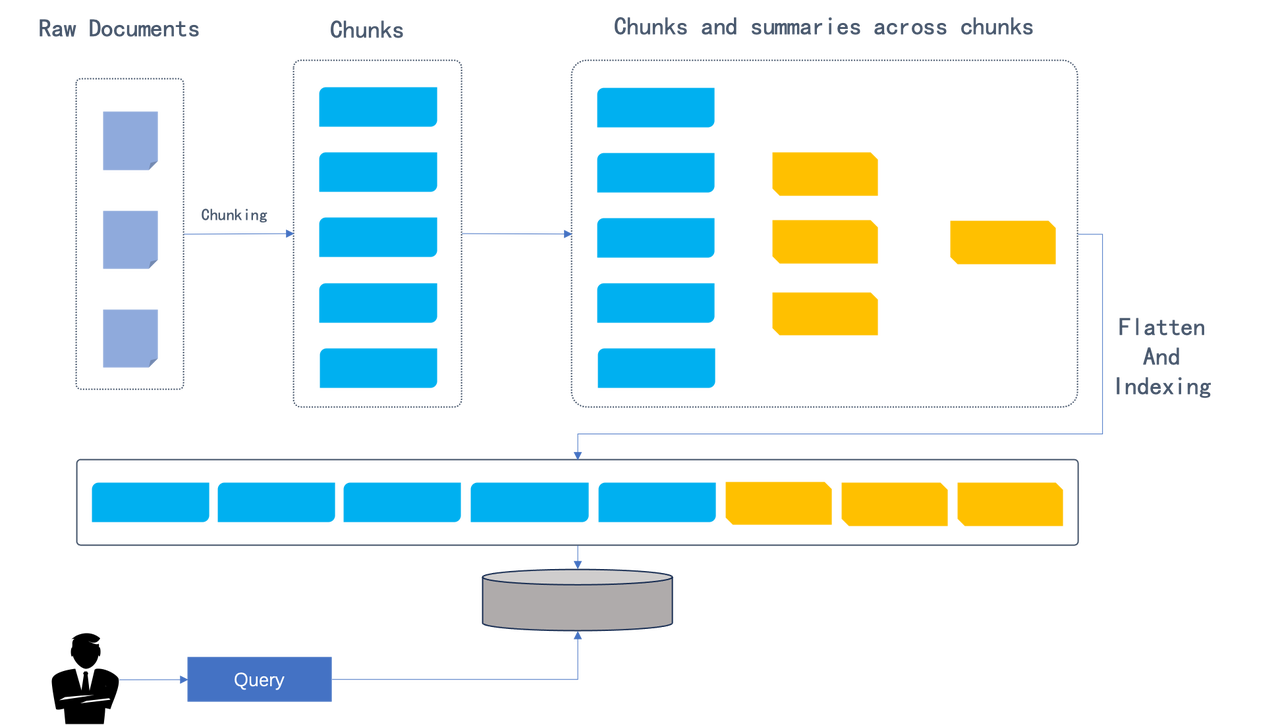

Is data processing like building with LEGO? Here is a detailed explanation of the ingestion pipeline.

11 min read

Bid Farewell to Complexity — RAGFlow CLI Makes Back-end Management a Breeze

10 min read

RAGFlow 0.21.0 - Ingestion Pipeline, Long-Context RAG, and Admin CLI

13 min read

RAGFlow 0.20.0 - Multi-Agent Deep Research

8 min read

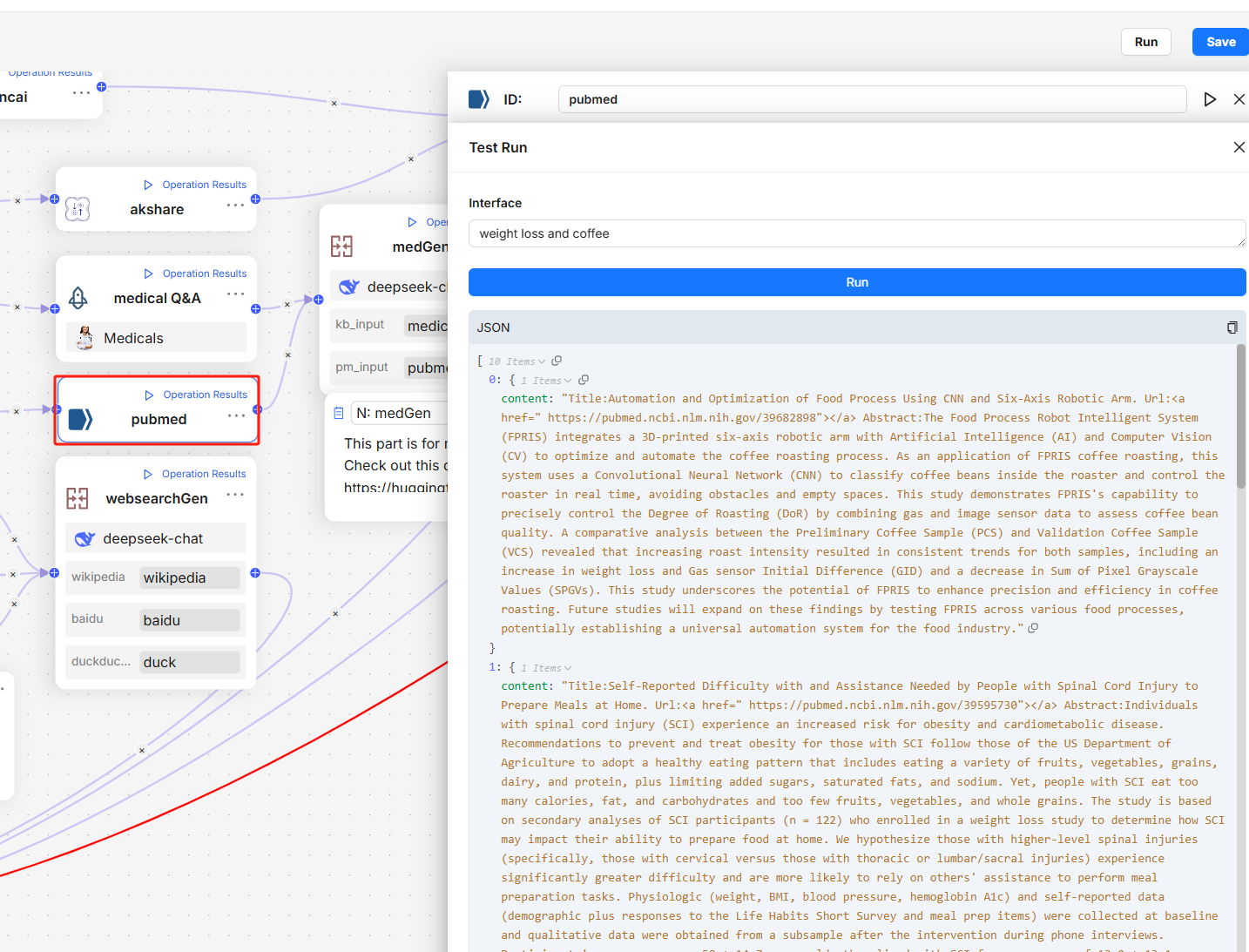

Agentic Workflow - What's inside RAGFlow 0.20.0

6 min read

A deep dive into RAGFlow v0.15.0

5 min read

Implementing Text2SQL with RAGFlow

6 min read

How Our GraphRAG Reveals the Hidden Relationships of Jon Snow and the Mother of Dragons

8 min read

RAGFlow Enters Agentic Era

6 min read

Agentic RAG - Definition and Low-code Implementation

4 min read

Implementing a long-context RAG based on RAPTOR