Six months have passed since our last year-end review. As the initial wave of excitement sparked by DeepSeek earlier this year begins to wane, AI seems to have entered a phase of stagnation. This pattern is evident in Retrieval-Augmented Generation (RAG) as well: although academic papers on RAG continue to be plentiful, significant breakthroughs have been few and far between in recent months. Likewise, recent iterations of RAGFlow have focused on incremental improvements rather than major feature releases. Is this the start of future leaps forward, or the beginning of a period of steady, incremental growth? A mid-year assessment is therefore both timely and necessary.

7 posts tagged with "LLM"

A deep dive into RAGFlow v0.15.0

The final RAGFlow release of 2024, v0.15.0, is now available, bringing the following key updates:

Agent Improvements

This version introduces several enhancements to the Agent, including additional APIs, step-run debugging, and import/export capabilities. Since v0.13.0, RAGFlow's Agent has been restructured to improve usability. The step-run debugging feature finalizes this process, enabling operators in the Agent workflow to be executed individually, thereby assisting users in debugging based on output information.
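To make the idea of step-run debugging concrete, here is a minimal sketch of a workflow whose operators can be run either end to end or one at a time. It does not use RAGFlow's Agent API: the `Workflow` class, its `run` and `step` methods, and the two toy operators are all hypothetical names chosen for illustration.

```python
class Workflow:
    """Hypothetical linear workflow: an ordered list of named operators."""

    def __init__(self, operators):
        # operators: list of (name, function) pairs, executed in order
        self.operators = operators

    def run(self, value):
        """Run every operator in sequence, passing each output to the next."""
        for _, func in self.operators:
            value = func(value)
        return value

    def step(self, name, value):
        """Run a single operator in isolation and return its output."""
        for op_name, func in self.operators:
            if op_name == name:
                return func(value)
        raise KeyError(f"unknown operator: {name}")


# Two toy operators standing in for real workflow components.
wf = Workflow([
    ("upper", str.upper),
    ("exclaim", lambda s: s + "!"),
])

full_output = wf.run("hi")              # runs both operators
step_output = wf.step("exclaim", "hi")  # runs only the second operator
```

Running one operator with a hand-picked input, as `step` does here, is what lets a user inspect an intermediate output without re-executing the whole workflow.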

What Infrastructure Capabilities does RAG Need beyond Hybrid Search

Infinity is a database specifically designed for Retrieval-Augmented Generation (RAG), excelling in both functionality and performance. It provides high-performance capabilities for dense and sparse vector searches, as well as full-text searches, along with efficient range filtering for these data types. Additionally, it features tensor-based reranking, enabling the implementation of powerful multi-modal RAG and integrating ranking capabilities comparable to Cross Encoders.

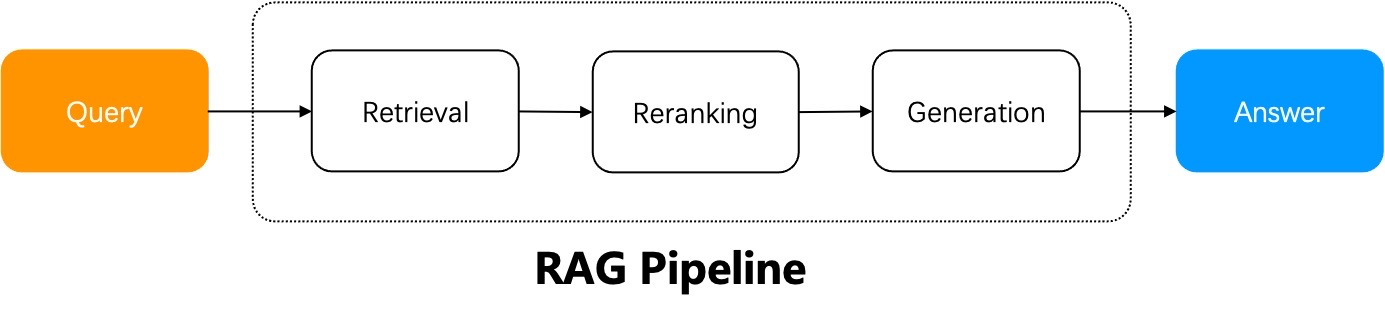

From RAG 1.0 to RAG 2.0, What Goes Around Comes Around

Search technology remains one of the major challenges in computer science, with few commercial products capable of searching effectively. Before the rise of Large Language Models (LLMs), powerful search capabilities weren't considered essential, as they didn't contribute directly to user experience. However, as LLMs gained popularity, a powerful built-in retrieval system became essential for applying LLMs in enterprise settings. This approach is known as Retrieval-Augmented Generation (RAG): searching internal knowledge bases for the content most relevant to a user query, then feeding it to the LLM for final answer generation.

RAGFlow Enters Agentic Era

As of v0.8, RAGFlow is officially entering the Agentic era, offering a comprehensive graph-based task orchestration framework on the back-end and a no-code workflow editor on the front-end. Why agentic? How does this feature differ from existing workflow orchestration systems?

Agentic RAG - Definition and Low-code Implementation

The workflow of a naive RAG system can be summarized as follows: the system retrieves content from a specified data source using the user query, reranks the retrieval results, appends prompts, and sends them to the LLM for final answer generation.
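The four steps above (retrieve, rerank, build the prompt, call the LLM) can be sketched in a few lines. This is an illustrative toy, not RAGFlow's implementation: the corpus, the term-overlap retriever, the term-frequency reranker, and the `echo_llm` stub are all assumptions chosen so the example runs offline.

```python
from collections import Counter

# Toy in-memory corpus standing in for "a specified data source" (made-up data).
DOCS = [
    "RAGFlow is an open-source RAG engine.",
    "Infinity is a database designed for RAG.",
    "Bananas are rich in potassium.",
]


def tokenize(text):
    return [t.strip(".,?!").lower() for t in text.split()]


def retrieve(query, docs, k=2):
    """Keep up to k documents that share the most distinct terms with the query."""
    q = set(tokenize(query))
    scored = [(len(q & set(tokenize(d))), d) for d in docs]
    scored = [(s, d) for s, d in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def rerank(query, candidates):
    """Reorder candidates by query-term frequency (placeholder for a real reranker)."""
    q = set(tokenize(query))

    def score(doc):
        counts = Counter(tokenize(doc))
        return sum(counts[t] for t in q)

    return sorted(candidates, key=score, reverse=True)


def build_prompt(query, passages):
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


def naive_rag(query, docs, llm):
    passages = rerank(query, retrieve(query, docs))
    return llm(build_prompt(query, passages))


def echo_llm(prompt):
    # Stub LLM: returns the prompt unchanged so the pipeline can be inspected.
    return prompt


output = naive_rag("What is Infinity database?", DOCS, echo_llm)
```

Note that the pipeline runs exactly once per query: nothing checks whether the retrieved passages actually answer the question.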

A naive RAG suffices in scenarios where the user's intent is evident, as the answer is included in the retrieved results and can be sent directly to the LLM. Yet ambiguous user intent is the norm in most circumstances and demands iterative queries to generate the final answer. For instance, questions that involve summarizing multiple documents require multi-step reasoning. These scenarios necessitate Agentic RAG, which introduces task orchestration mechanisms into the question-answering process.

Agent and RAG complement each other. Agentic RAG, as the name suggests, is an agent-based RAG. The major distinction between an agentic RAG and a naive RAG is that agentic RAG introduces a dynamic agent orchestration mechanism, which critiques retrieval results, rewrites the query according to the intent behind each user query, and employs "multi-hop" reasoning to handle complex question-answering tasks.
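The critique, rewrite, and multi-hop loop described above can be sketched as follows. This is a hedged toy rather than RAGFlow's orchestration code: the passages are made up, and `retrieve`, `critique`, `rewrite`, and `generate` are hypothetical rule-based stubs standing in for what would normally be retrieval and LLM calls.

```python
# Made-up passages: answering the question below needs two hops.
PASSAGES = [
    "Report Alpha was written by Dana.",
    "Dana is based in Lisbon.",
    "Report Beta was written by Lee.",
]
STOPWORDS = {"is", "the", "of", "in", "was", "by", "which", "where"}


def tokens(text):
    return {t.strip(".,?!").lower() for t in text.split()}


def retrieve(query):
    """Toy retriever: return the single passage with the largest term overlap."""
    q = tokens(query) - STOPWORDS
    best = max(PASSAGES, key=lambda p: len(q & tokens(p)))
    return [best] if q & tokens(best) else []


def critique(question, evidence):
    """Toy critic: the evidence is sufficient once it states a location."""
    return any("based in" in p for p in evidence)


def rewrite(question, evidence):
    """Toy rewrite: follow the entity named at the end of the latest passage."""
    if not evidence:
        return question
    entity = evidence[-1].rstrip(".").split()[-1]
    return f"Where is {entity} based?"


def generate(question, evidence):
    # Stub generator: a real system would prompt an LLM with the evidence.
    return " ".join(evidence)


def agentic_rag(question, max_hops=3):
    query, evidence = question, []
    for _ in range(max_hops):
        for passage in retrieve(query):
            if passage not in evidence:
                evidence.append(passage)
        if critique(question, evidence):  # stop once the evidence is judged sufficient
            break
        query = rewrite(question, evidence)  # otherwise issue a follow-up query
    return generate(question, evidence), evidence


answer, evidence = agentic_rag("Which city is the author of Report Alpha based in?")
```

The first hop finds only the author; the critic rejects that evidence, the rewritten query asks about the author directly, and the second hop retrieves the location. A single-pass pipeline would have stopped after the first retrieval.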

Implementing a long-context RAG based on RAPTOR

RAGFlow v0.6.0 was released this week, solving many ease-of-use and stability issues that have emerged since it was open-sourced this April. Future releases of RAGFlow will focus on tackling the deep-seated problems of RAG capability. We hate to say it, but existing RAG solutions on the market are still at the POC (Proof of Concept) stage and can't be applied directly to real production scenarios.